What is WP4 of the LIS4.0 project about? What challenges must it face?

WP4 of the LIS4.0 project deals with developing and applying innovative technologies for the sustainable mobility of people. In particular, the research aims to design Advanced Driver-Assistance Systems (ADAS) and systems for Connected Automated Driving (CAD) by developing automation algorithms and communication systems between vehicles and infrastructure (V2X). At the same time, WP4 aims to analyse and verify the impact of innovative technologies applied to vehicle construction, and to consider the introduction of new materials with a better strength-to-weight ratio and a higher level of energy absorption. All WP4 activities are carried out with the central role of people in mind, which translates into attention to comfort and the development of human-machine interfaces (HMI).

All WP4 activities revolve around a demonstrator vehicle: a small zero-emission electric bus that carries about ten people and is currently being turned into a self-driving urban shuttle. The objectives of the project are varied and ambitious, and so are the challenges it faces. First of all, we are developing the architecture of the vehicle's control system, based on control logic that leads progressively to the complete automation of driving operations, including the typical features of V2X connectivity. Secondly, we are implementing mathematical models to evaluate how the installed lightweight components affect the performance and battery life of the vehicle, and how materials with higher deformation energy absorption affect passive safety. Finally, we are creating an innovative human-machine interface that builds users' trust in the autonomous driving system by sharing the information used to move the vehicle and communicating the actions it is about to take, every step of the way.

Which communication, connectivity and localisation solutions are required to support the control of a self-driving system?

This question has no easy answer. Diverse visions and ideas coexist in both industry and academia. Many research groups and companies are working exclusively on camera-only localisation systems, mainly to reduce costs. Others combine cameras with a large array of additional sensors (lidar, radar, ultrasound), which means processing a larger quantity of data and performing sensor fusion to obtain more reliable results. The scientific and industrial communities are divided on connectivity as well. Some research groups support highly reliable V2I and V2V connections, for example over the 5G network, which they consider essential to reach the maximum level of automation safely. Others believe the vehicle must be fully autonomous, at least as much as human drivers are, to limit cybersecurity risks. Given this landscape, our prototype is equipped with an abundance of sensors. It likewise has many options for receiving and sending data, so that we can compare and evaluate several candidate solutions.
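
To give a concrete idea of what sensor fusion means in its simplest form, the sketch below combines independent estimates of the same quantity by inverse-variance weighting. It is an illustration only, not the method used on the prototype: the function name `fuse_estimates` and the sensor readings are hypothetical, and real systems typically rely on more elaborate filters.

```python
from typing import Iterable, Tuple


def fuse_estimates(estimates: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse independent (value, variance) estimates of the same quantity.

    Each estimate is weighted by the inverse of its variance, so more
    precise sensors contribute more to the fused result.
    """
    pairs = list(estimates)
    if not pairs:
        raise ValueError("at least one estimate is required")
    if any(variance <= 0 for _, variance in pairs):
        raise ValueError("variances must be strictly positive")

    weights = [1.0 / variance for _, variance in pairs]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, pairs)) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical readings of the distance to an obstacle, in metres.
camera = (10.4, 0.50)  # (estimate, variance): less precise
lidar = (10.0, 0.05)   # (estimate, variance): more precise
distance, variance = fuse_estimates([camera, lidar])
```

The fused variance is always smaller than the smallest input variance, which is the sense in which combining sensors gives more reliable results, at the price of more data to process.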

Beyond improving safety, what other aspects of human-machine interaction are you now investigating to allow the most natural integration between users and external subjects?

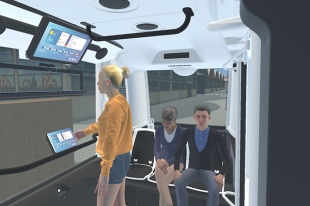

Interaction with a self-driving vehicle used for public transport raises many questions about whether and how users can ask for information and give commands. In conventional vehicles, the driver is the person in charge of handling those requests. Without a driver, problems might occur, and a self-driving vehicle can become dangerous during emergencies. Moreover, it should be remembered that public transport users may have different needs and require specific support. Therefore, we developed an interface able to address the requests of diverse users, which meant implementing an interaction system that engages several human senses. The system relies mainly on audio and video channels, but it is integrated with a contactless device with ultrasonic tactile feedback that activates vehicle features by recognising specific hand gestures. Beyond interaction inside the vehicle, we also explored ways to communicate with the external environment. It is vital that the vehicle can clearly show its intended movement and warn users of danger. For this, we decided to use the devices already installed on the vehicle, with their behaviour suitably encoded.

During the activities, how did you combine physical experiments with development in simulated environments?

The three main WP4 macro-activities follow different paths, linked on the one hand to their specific features and on the other to the health emergency that began in 2020, which forced us to bring some numerical activities forward ahead of the experiments. The activities related to the use of innovative technologies on the vehicle are carried out exclusively via numerical simulations: we developed a modular model of the shuttle, including the electric motor, batteries and speed control logic, to predict its battery life according to the overall mass and the mission, defined in terms of distance and travel speed between stops. The other two activities aim to build the prototype complete with all the planned features. The control system of the bus was defined and tested in a simulator. Tests were carried out to collect signals from the installed sensors (camera, lidar, GPS), which are integrated with information from other sensors to reconstruct the environment in which the vehicle moves. At the moment, we are working on a portable traffic light network that can be brought on-site to test how the vehicle and the infrastructure communicate. As for human-machine interaction, the whole interaction system was implemented and validated in a virtual reality environment. VR allowed us to explore different technical solutions and identify the one that best suits the physical prototype. The fully developed interaction system will be installed on the vehicle in the coming months and then validated on-site.
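
As a rough illustration of how battery life can depend on overall mass and on a mission defined by distance and speed between stops, here is a minimal energy-balance sketch. It is not the project's modular model: the class `Shuttle`, the functions, and every parameter value (rolling coefficient, drag area, drivetrain efficiency, battery capacity) are assumptions chosen for the example. Drag is applied at cruising speed over the whole segment and no energy is recovered when braking, so the estimate is pessimistic.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

G = 9.81            # gravitational acceleration, m/s^2
AIR_DENSITY = 1.2   # kg/m^3, approximate value at sea level


@dataclass
class Shuttle:
    """Hypothetical vehicle parameters; none come from the LIS4.0 prototype."""
    mass_kg: float
    battery_kwh: float
    rolling_coeff: float = 0.012   # assumed tyre rolling resistance
    drag_area_m2: float = 4.0      # assumed drag coefficient times frontal area
    drivetrain_eff: float = 0.85   # assumed battery-to-wheel efficiency


def segment_energy_j(shuttle: Shuttle, distance_m: float, speed_ms: float) -> float:
    """Battery energy (J) to accelerate from a stop and cruise to the next stop."""
    kinetic = 0.5 * shuttle.mass_kg * speed_ms ** 2
    rolling = shuttle.rolling_coeff * shuttle.mass_kg * G * distance_m
    drag = 0.5 * AIR_DENSITY * shuttle.drag_area_m2 * speed_ms ** 2 * distance_m
    return (kinetic + rolling + drag) / shuttle.drivetrain_eff


def missions_per_charge(shuttle: Shuttle, mission: Sequence[Tuple[float, float]]) -> float:
    """Number of complete missions a full battery can sustain.

    A mission is a sequence of (distance in m, cruising speed in m/s) segments.
    """
    energy_per_mission = sum(segment_energy_j(shuttle, d, v) for d, v in mission)
    return shuttle.battery_kwh * 3.6e6 / energy_per_mission


# Hypothetical urban loop: four stops, a few hundred metres apart.
loop = [(400.0, 6.0), (250.0, 5.0), (600.0, 7.0), (350.0, 5.0)]
baseline = Shuttle(mass_kg=3000.0, battery_kwh=30.0)
lightened = Shuttle(mass_kg=2700.0, battery_kwh=30.0)
gain = missions_per_charge(lightened, loop) / missions_per_charge(baseline, loop)
```

Comparing `baseline` and `lightened` on the same loop shows the kind of question such a model answers: how much additional range a given mass reduction buys for a given mission profile.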

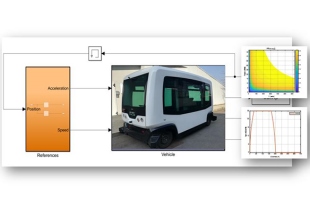

What are the most relevant results of your work on the self-driving control logic implemented on the experimental platform built with the simulator of the LIS4.0 project?

We are currently designing a modular computing system that collects information from the prototype's on-board sensors and expresses the results in a reference frame fixed to the vehicle chassis. This architecture allows the different processing systems on the vehicle to access and process information continuously, as needed. We also created a digital communication link between the control computer and the on-board PLC system to enable the movement of the bus via bidirectional CAN communication. In addition, we are developing a communication system between the prototype's architecture and an external infrastructure via mobile data (currently 4G) to make information from the environment available to the vehicle. We are also porting the control logic designed in simulation to the platform on the existing vehicle: this will enable us to improve the perception of the surrounding environment, decide which actions to take and send new control inputs to the actuation system.
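
To illustrate what exchanging commands over CAN involves at the byte level, the sketch below packs a speed and steering command into an 8-byte payload, the maximum data length of a classic CAN frame, and decodes it again. The payload layout, scaling factors and function names are hypothetical and do not describe the actual protocol between the control computer and the PLC.

```python
import struct
from typing import Tuple

# Assumed layout (little-endian): uint16 speed in cm/s, int16 steering angle
# in tenths of a degree, then 4 padding bytes to fill the 8-byte payload.
_COMMAND_FORMAT = "<Hh4x"


def encode_command(speed_ms: float, steering_deg: float) -> bytes:
    """Pack a motion command into an 8-byte CAN data field."""
    return struct.pack(
        _COMMAND_FORMAT,
        round(speed_ms * 100),      # m/s -> cm/s
        round(steering_deg * 10),   # deg -> 0.1 deg
    )


def decode_command(payload: bytes) -> Tuple[float, float]:
    """Unpack a payload produced by encode_command into (m/s, degrees)."""
    speed_cms, steering_tenths = struct.unpack(_COMMAND_FORMAT, payload)
    return speed_cms / 100, steering_tenths / 10


# Round trip: the same format can serve both directions of the link.
payload = encode_command(1.5, -12.3)
speed, steering = decode_command(payload)
```

Fixed-point scaling of this kind is a common choice on CAN because payloads are small; values outside the representable range make `struct.pack` raise `struct.error`, which a real system would need to handle.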